Yiding Jiang

yd <last name> at cmu dot edu

I am a PhD student in the Machine Learning Department at Carnegie Mellon University, where I work with Professor Zico Kolter. My research is supported by the Google PhD Fellowship.

Previously, I was an AI Resident at Google Research. I obtained my B.S. in Electrical Engineering and Computer Science from UC Berkeley, where I worked on robotics and generative models with Professor Ken Goldberg. I have also spent time as a research intern at Meta AI Research and Cerebras Systems.

Research interests

I am interested in understanding the science of deep learning and using those insights to improve models. My research spans representation learning, reinforcement learning, and generalization, including both concrete generalization bounds and less well-understood empirical phenomena such as out-of-distribution and zero-shot generalization.

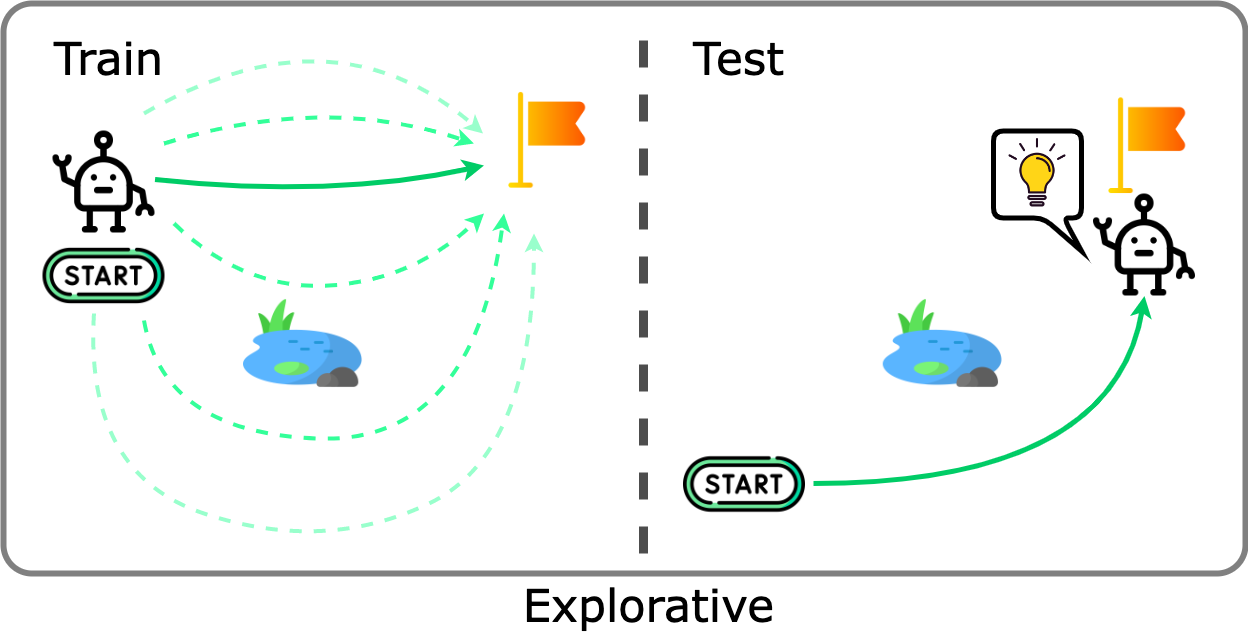

One of my current interests is identifying the structural properties of real-world data that facilitate deep learning. I am also interested in studying exploration as a mechanism for improving generalization, driving models to acquire diverse, informative data and adapt to dynamic environments.

Selected works

(full publication list) * indicates equal contribution

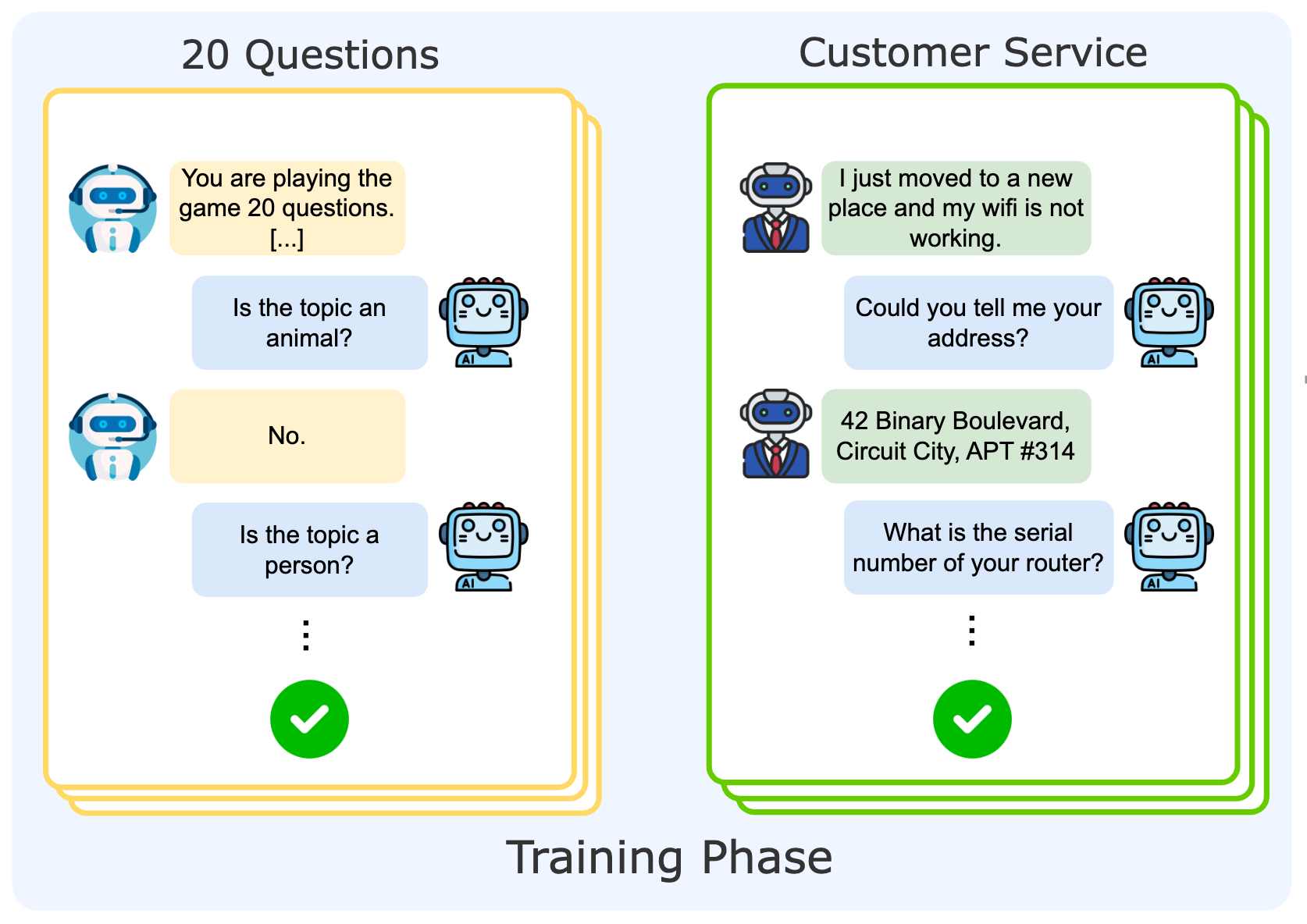

Fahim Tajwar*, Yiding Jiang*, Abitha Thankaraj, Sumaita Sadia Rahman, J. Zico Kolter, Jeff Schneider, Ruslan Salakhutdinov

ICML, 2025 (oral) | [Website] [code] [SFT dataset] [DPO dataset]

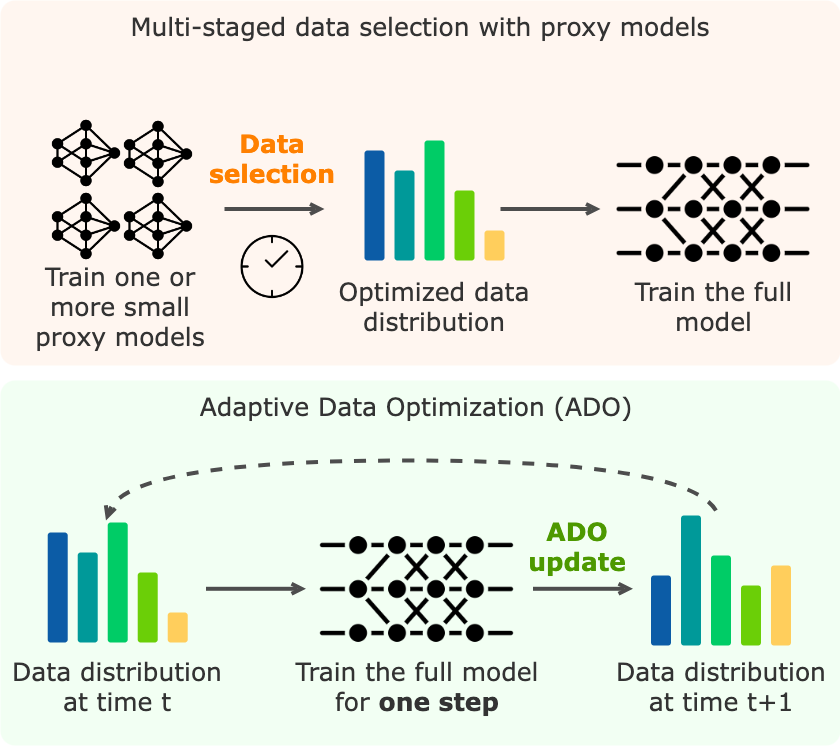

Yiding Jiang*, Allan Zhou*, Zhili Feng, Sadhika Malladi, J. Zico Kolter

ICLR, 2025 | [code] [blog post]

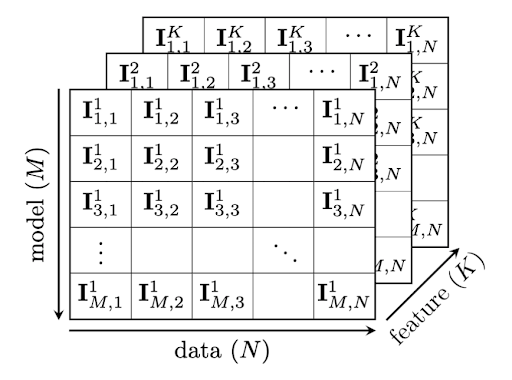

Yiding Jiang, Christina Baek, J. Zico Kolter

ICLR, 2024 (oral)

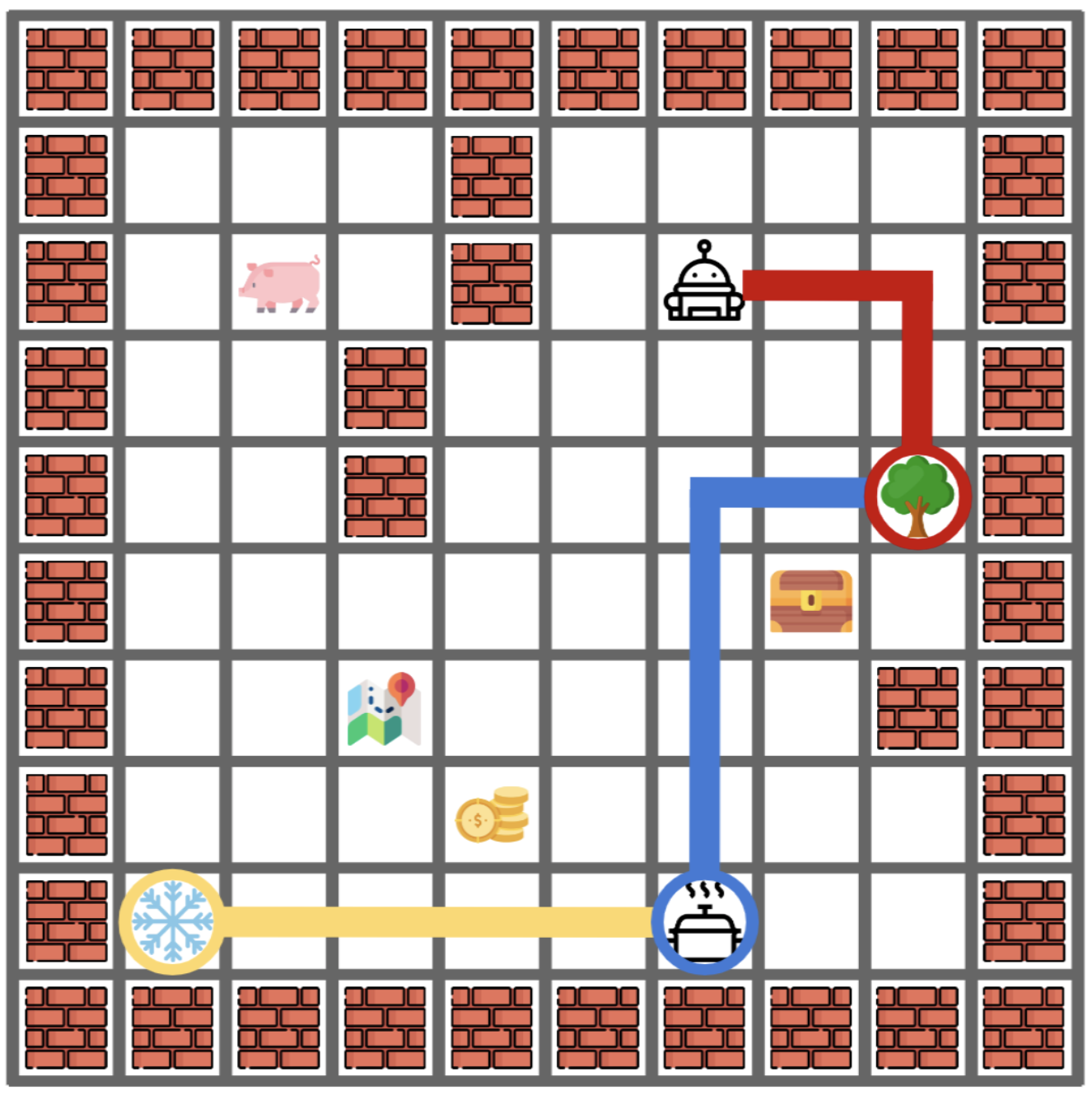

Yiding Jiang, J. Zico Kolter, Roberta Raileanu

NeurIPS, 2023 | [code]

Yiding Jiang*, Evan Z. Liu*, Benjamin Eysenbach, J. Zico Kolter, Chelsea Finn

NeurIPS, 2022 | [code]

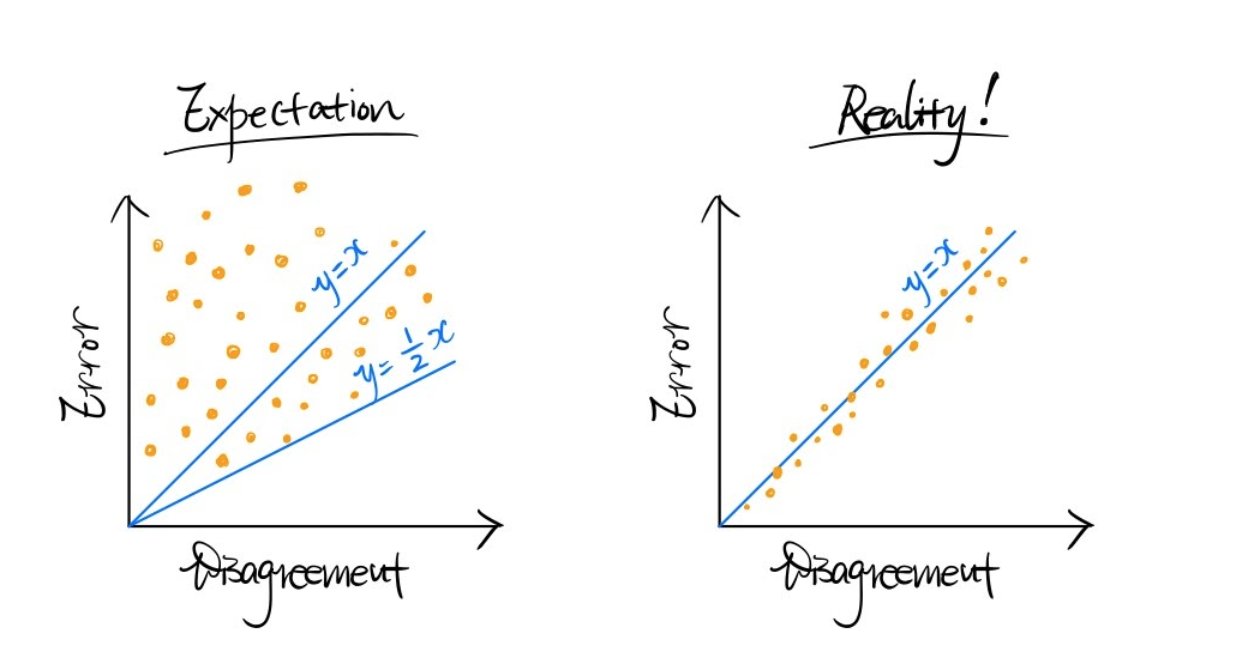

Yiding Jiang*, Vaishnavh Nagarajan*, Christina Baek, J. Zico Kolter

ICLR, 2022 (spotlight) | [blog post]

Yiding Jiang*, Behnam Neyshabur*, Hossein Mobahi, Dilip Krishnan, Samy Bengio

ICLR, 2020

"Science meets the Engineering of Deep Learning" workshop, NeurIPS 2019 (oral)

Yiding Jiang, Shixiang Gu, Kevin Murphy, Chelsea Finn

NeurIPS, 2019 | [project page] [environment]

Teaching

- Teaching Assistant, 10-708 Probabilistic Graphical Models. Carnegie Mellon University. Fall 2022.

- Teaching Assistant, 10-725 Convex Optimization. Carnegie Mellon University. Fall 2021.

- Reader, CS170 Efficient Algorithms and Intractable Problems. UC Berkeley. Fall 2017.

Updated May 2025.